Current AI Data Privacy Landscape

A comparison of data privacy practices across major AI providers—OpenAI, Anthropic, Google, and DeepSeek—covering training data usage, retention, HIPAA compliance, and user controls.

Data privacy concerns consistently come up in my conversations with companies weighing AI adoption. I've compiled this review of OpenAI, Anthropic, Google, and DeepSeek to clarify the current landscape and facilitate these discussions. The bottom line: regardless of how stringent your requirements, viable options exist.

The chart below compares the privacy practices of leading large language model (LLM) providers (OpenAI, Anthropic, Google, and DeepSeek) across their consumer, paid, enterprise, and self-hosted offerings. It covers whether user data is used for model training by default, whether an opt-out is available, how long data is retained, HIPAA eligibility, and which jurisdiction holds the data.

For privacy-conscious users and organizations, the enterprise tiers from OpenAI, Anthropic, and Google Cloud (Vertex AI) provide the most robust hosted protections: no training use by default, short retention windows, and regulatory compliance options such as HIPAA BAAs. In contrast, DeepSeek’s hosted chat service poses significant privacy risks: no opt-out from training, an unspecified retention period, and data storage in China. For the highest level of privacy, self-hosting an open-source model remains the safest route, provided you have the infrastructure to support it.

Privacy Practices Across LLM Providers (Mar 2025)

| Provider | Tier | Data Used for Training (Default) | Opt-Out Available | Retention Duration | HIPAA Eligible | Notes |
|---|---|---|---|---|---|---|
| OpenAI | ChatGPT Free/Plus | Yes | Yes | Indefinite unless opted out; 30 days in Temp Chat | No | Opt out in settings; Temp Chat deletes after 30 days |
| OpenAI | API / Enterprise / Azure | No | Not needed | 30 days (shorter if requested) | Yes | Azure-hosted data never sent to OpenAI; BAAs available |
| Anthropic | Claude.ai Free / Pro | No | Not needed | 30 days | No | Data flagged by T&S may be kept up to 2 yrs; feedback up to 10 yrs |
| Anthropic | API / Enterprise | No | Not needed | 30 days (zero-retention optional) | Yes | Zero retention config for enterprise with BAA |
| Google | Bard (Consumer Gemini) | Yes | Yes | Indefinitely with history on; ~72 hrs with history off | No | Opt out via "Gemini Apps Activity" toggle |
| Google | Vertex AI / Cloud API | No | Not needed | Short/transient; enterprise-defined | Yes | Vertex AI covered under HIPAA BAA; strict enterprise data controls |
| DeepSeek | Chat Service (Cloud) | Yes | No | Unspecified; stored in China | No | All data used for training; high surveillance/privacy risk |
| Self-hosted | OSS model | No (you control) | Not applicable | You control | You control | Only safe privacy option; requires internal infrastructure |

Note: HIPAA Eligible refers to whether the provider offers a Business Associate Agreement (BAA)—a contract required under U.S. law for handling protected health information (PHI). A BAA commits the provider to HIPAA-compliant data safeguards and breach protocols.

PHI: Protected Health Information—any individually identifiable health data (e.g., names, dates, diagnoses, test results) governed by HIPAA in healthcare contexts.
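
To make the comparison easier to query, the hosted rows of the table can be encoded as data and filtered by requirement. The following is a minimal sketch, not a vetted compliance tool: the `Offering` class, the `shortlist` function, and its parameter names are hypothetical, and the values are simply transcribed from the table as of Mar 2025. The self-hosted row is left out because its HIPAA status depends on your own setup.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Offering:
    provider: str
    tier: str
    trains_by_default: bool  # "Data Used for Training (Default)" column
    hipaa_eligible: bool     # "HIPAA Eligible" column (a BAA is offered)


# Transcribed from the table (Mar 2025). Re-check each provider's current
# policy before relying on these values.
OFFERINGS = [
    Offering("OpenAI", "ChatGPT Free/Plus", True, False),
    Offering("OpenAI", "API / Enterprise / Azure", False, True),
    Offering("Anthropic", "Claude.ai Free / Pro", False, False),
    Offering("Anthropic", "API / Enterprise", False, True),
    Offering("Google", "Bard (Consumer Gemini)", True, False),
    Offering("Google", "Vertex AI / Cloud API", False, True),
    Offering("DeepSeek", "Chat Service (Cloud)", True, False),
]


def shortlist(offerings, *, need_baa=False, allow_default_training=True):
    """Return the offerings that satisfy the given (hypothetical) requirements."""
    result = []
    for o in offerings:
        if need_baa and not o.hipaa_eligible:
            continue  # a BAA is required but this tier does not offer one
        if not allow_default_training and o.trains_by_default:
            continue  # this tier trains on user data unless you opt out
        result.append(o)
    return result


if __name__ == "__main__":
    for o in shortlist(OFFERINGS, need_baa=True):
        print(f"{o.provider}: {o.tier}")
```

Calling `shortlist(OFFERINGS, need_baa=True)` keeps only the three enterprise/API tiers, which matches the table's HIPAA column.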

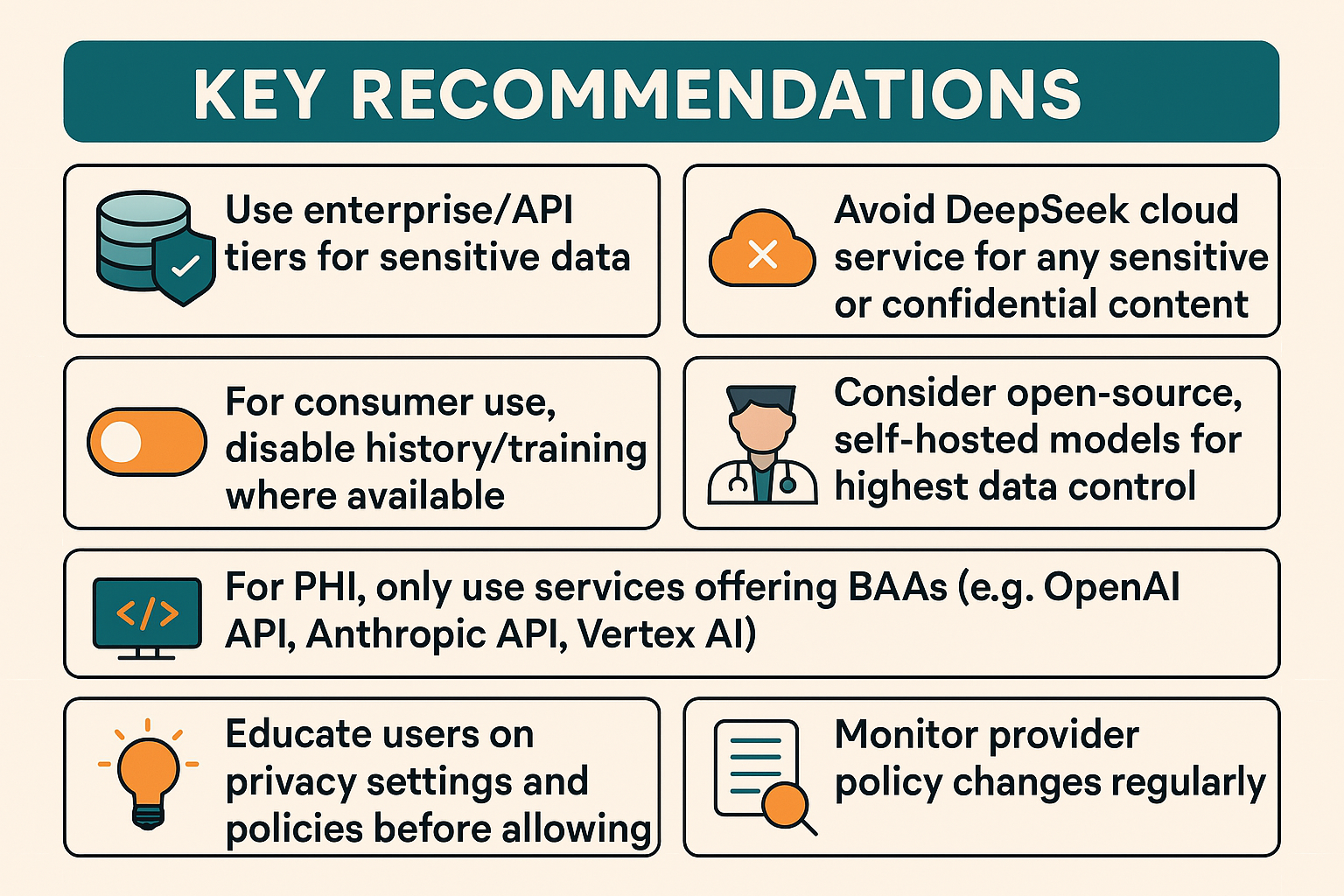

Key Recommendations:

- Use enterprise/API tiers for sensitive data.

- For consumer use, disable history/training where available.

- Avoid DeepSeek cloud service for any sensitive or confidential content.

- For PHI, use only services that offer a BAA (e.g., OpenAI API, Anthropic API, Vertex AI), and have the BAA signed before sending any PHI.

- Consider open-source, self-hosted models for highest data control.

- Educate users on privacy settings and policies before allowing tool use.

- Monitor provider policy changes regularly.
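
One way to put these recommendations into practice is a simple allow-list that maps a data classification to the kinds of AI service it may be sent to. The sketch below is illustrative only: the `Sensitivity` and `Tier` categories, the `ALLOWED` mapping, and `is_allowed` are hypothetical names, and treating self-hosted models as acceptable for PHI assumes your own infrastructure already meets your HIPAA obligations.

```python
from enum import Enum


class Sensitivity(Enum):
    PUBLIC = "public"
    CONFIDENTIAL = "confidential"
    PHI = "phi"


class Tier(Enum):
    CONSUMER = "consumer"                    # e.g. free/paid chat apps
    ENTERPRISE_API = "enterprise_api"        # enterprise/API tier, no BAA signed
    ENTERPRISE_API_BAA = "enterprise_api_baa"  # enterprise/API tier with a BAA
    SELF_HOSTED = "self_hosted"              # open-source model on your own infra


# Hypothetical policy derived from the recommendations above:
# - sensitive data goes only to enterprise/API tiers or self-hosted models
# - PHI additionally requires a BAA (or infrastructure you control; assumption)
ALLOWED = {
    Sensitivity.PUBLIC: set(Tier),
    Sensitivity.CONFIDENTIAL: {
        Tier.ENTERPRISE_API,
        Tier.ENTERPRISE_API_BAA,
        Tier.SELF_HOSTED,
    },
    Sensitivity.PHI: {Tier.ENTERPRISE_API_BAA, Tier.SELF_HOSTED},
}


def is_allowed(sensitivity: Sensitivity, tier: Tier) -> bool:
    """Return True if data of this sensitivity may be sent to this tier."""
    return tier in ALLOWED[sensitivity]


if __name__ == "__main__":
    print(is_allowed(Sensitivity.PHI, Tier.ENTERPRISE_API))          # no BAA
    print(is_allowed(Sensitivity.CONFIDENTIAL, Tier.ENTERPRISE_API))
```

A check like this could sit in an internal gateway or a pre-send hook, so the policy is enforced in one place and is easy to update when a provider changes its terms.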

Full report: