Building Your Personal Data Pipeline: Taking Control of Your AI Context

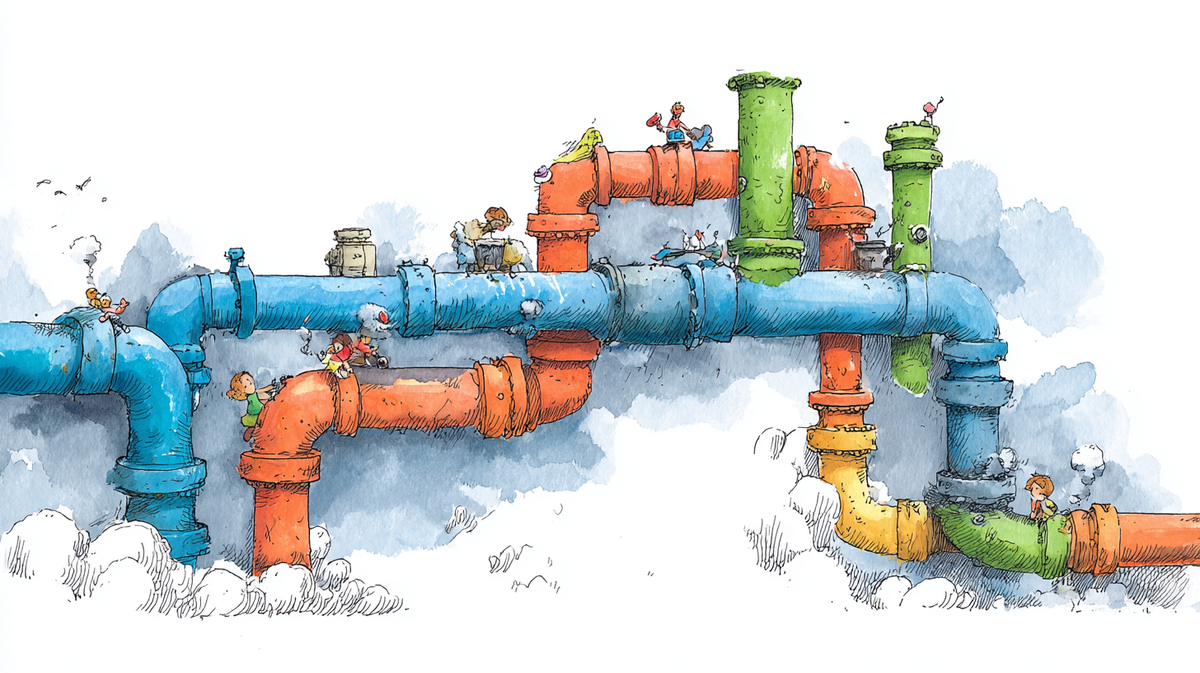

Companies leverage proprietary data for AI advantage. As individuals, we should too. I'm building my own personal data pipeline to make all my scattered context (notes, conversations, insights) searchable and mine. Here's why you should consider doing the same.

Every important conversation you've had, every strategic decision you've documented, every lesson learned from what worked and what didn't: this context shapes your professional judgment. But when you need it most, it's scattered across tools, buried in email threads, or trapped in someone else's system.

Consider a CEO preparing for a board meeting who needs to reference all customer feedback about pricing strategy from the past year, or a sales manager trying to understand which objections consistently come up with enterprise clients. The information exists, but accessing it requires manual archaeology across multiple platforms.

By now, it's clear that context is everything when working with AI. OpenAI is rolling out memory features, "context engineering" is becoming a buzzword, and companies are discovering that their competitive edge lies in their proprietary data, which supplies context that lets them create something no one else can replicate.

What we're missing is that individuals should be leveraging this too, because we also have proprietary data.

Your Data Is Your Competitive Advantage

Just like companies, we each have a wealth of personal, proprietary information. My thoughts, my meeting notes, my past experiences, the things I've written, the conversations I've had: these are all threads I can pull on to create something that, even when aided by AI, remains uniquely mine and carries my voice.

Consider the professional scenarios where this matters:

For Sales Leaders: Customer objections follow patterns, but they're scattered across call transcripts, CRM notes, and post-meeting debriefs. Instead of relying on memory or asking "What usually works with enterprise clients?", you can query: "Show me all pricing objections from Fortune 500 prospects in the last six months and how we addressed them." The system connects successful strategies across different deals and timeframes.

For Marketing Managers: Campaign performance insights are buried across analytics dashboards, team retrospectives, and executive reports. When planning the next quarter's strategy, you need more than last month's metrics; you need to understand how messaging evolved, which audience segments responded over time, and what external factors influenced results.

For Engineering Managers: When planning sprint retrospectives, instead of asking "What went wrong last quarter?" you can query: "Show me all technical debt discussions, blocked dependencies, and team capacity concerns from Q3." The system surfaces not just meeting notes, but Slack threads, architecture decisions, and post-mortems, giving you the full context to lead more effective discussions.

Even with company resources and tools, these scenarios require pulling together information that spans multiple systems and often includes your personal notes, observations, and informal conversations that never make it into official documentation.

The difference is that in the past, we had to depend on big tech companies (Google, Facebook, whatever) to synthesize our data. We couldn't do this magic individually because we didn't have the tools. Now? Listen, what I'm contemplating is hardly going to be perfect, but as I sit here on my couch in my sweats with my laptop in my lap, I feel really confident that I can get 60-80% of the utility out of this with a couple of days' work. That statement is INSANE.

Why Vendor Memory Features Fall Short

OpenAI's memory feature offers an easy solution for people who aren't ready to manage their context manually. ChatGPT decides what is important enough to persist in memory and when to retrieve it. And, to be fair, this is better than having no memory at all. OpenAI is trying to solve the product problem of people getting mediocre outputs because they don't know how to set the interaction up for success.

I see how that's good for OpenAI and I see how that's good for some users. However, those outcomes are always going to be worse than what someone gets by thoughtfully and strategically managing their own context. ChatGPT is going to remember where you work and what you do. It's never going to retrieve a full meeting transcript when you want to write a follow-up email to a potential client. That's not how implicit context management works.

The companies that already have robust ecosystems of apps and data will clearly provide this. If you are in the Google world, your emails are there, your calendars are there, your docs are there: spreadsheets, presentations, all of it. It makes a ton of sense that once it is economically viable (never mind technically; it's already technically viable), they will provide this service. The same goes for Microsoft: if you are a Microsoft shop, everything's on Microsoft, and they will provide this eventually. Additionally, both ChatGPT and Claude let people add integrations that bring individual data sources into scope. If those data sources cover your needs, these integrations could work for you too. But your whole world had better be in there; otherwise, you're still going to need to pull some stuff together.

If vendor solutions are the best you have available, they're solid options. But we can do better.

Building Your Own: My Approach

This project has been the subject of a lot of thought and discussion. I've been talking about this for maybe a year. It wasn't until I went to an unconference this past Saturday and saw Danny Wen's implementation that I realized I could start small and create something genuinely useful.

At its core, I want an accessible repository of "everything": things I've written and created, my meeting notes, interesting discoveries from the internet, my AI conversations, the outputs I've created, and the prompts I used to create them. All connected, all searchable, all mine. When I say "everything," I mean all types of documents and data, not every single message. I have no intention of piping every email or text message into this thing. I don't want that, and I think it would create a worse experience.

Everything Becomes Markdown

Everything becomes a Markdown file. Markdown is effectively the native file format of AI: models read and write it fluently, so any model can process and understand the content without translation layers. More importantly, it's an extremely efficient way to capture formatting and structure (headers, lists, emphasis, links) using minimal tokens. Since AI models are still constrained by context windows, this efficiency matters when you're feeding large amounts of data into queries.
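To make the efficiency point concrete, here's a rough Python illustration of my own, not a benchmark: the same short note written as HTML and as Markdown. It compares character counts, which are only a loose proxy for tokens, since real token counts depend on each model's tokenizer.

```python
# The same note written two ways. The content is an illustrative example only.
html_note = (
    "<h2>Pricing feedback</h2>\n"
    "<ul>\n"
    "  <li><strong>Acme</strong>: wants annual billing</li>\n"
    '  <li>See <a href="https://example.com/call-notes">call notes</a></li>\n'
    "</ul>\n"
)
markdown_note = (
    "## Pricing feedback\n"
    "- **Acme**: wants annual billing\n"
    "- See [call notes](https://example.com/call-notes)\n"
)

# Character count is a rough stand-in for tokens; real counts depend on the tokenizer.
for label, text in (("HTML", html_note), ("Markdown", markdown_note)):
    print(f"{label}: {len(text)} characters")

savings = 1 - len(markdown_note) / len(html_note)
print(f"Markdown is {savings:.0%} shorter in this example")
```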

I'm using Obsidian as the repository because Obsidian vaults are just directories of Markdown files on your filesystem. No proprietary database, no vendor lock-in. If Obsidian disappears tomorrow, I still have a folder full of standard Markdown files that any tool can read.

The Workflow

The process has three stages:

Input: Save or convert content to Markdown files. This means developing new habits: instead of bookmarking that insightful LinkedIn post, I save it as a Markdown file with proper metadata. Instead of leaving meeting notes scattered across different apps, everything gets converted and filed.
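Here's a minimal Python sketch of what "save it as a Markdown file with proper metadata" could look like. The `inbox/` folder, the frontmatter fields, and the `save_note` and `slugify` helpers are my own hypothetical choices, not an Obsidian requirement; Obsidian simply shows YAML frontmatter at the top of a note as that note's properties.

```python
import json
import re
import tempfile
from datetime import date
from pathlib import Path


def slugify(title: str) -> str:
    """Turn a title into a lowercase, hyphen-separated file name."""
    slug = re.sub(r"[^a-z0-9]+", "-", title.lower()).strip("-")
    return slug or "untitled"


def save_note(vault: Path, title: str, body: str, source: str,
              captured: date, tags: list[str]) -> Path:
    """Write one captured item as Markdown with YAML frontmatter into vault/inbox/."""
    inbox = vault / "inbox"
    inbox.mkdir(parents=True, exist_ok=True)
    # json.dumps yields double-quoted strings and [..] lists, which YAML also accepts.
    lines = [
        "---",
        f"title: {json.dumps(title)}",
        f"source: {json.dumps(source)}",
        f"captured: {captured.isoformat()}",
        f"tags: {json.dumps(tags)}",
        "---",
        "",
        body.strip(),
        "",
    ]
    # Date-prefixed names keep a plain directory listing chronological.
    path = inbox / f"{captured.isoformat()}-{slugify(title)}.md"
    path.write_text("\n".join(lines), encoding="utf-8")
    return path


# Usage: capture into a throwaway vault so the example leaves nothing behind.
with tempfile.TemporaryDirectory() as tmp:
    note_path = save_note(Path(tmp), "Why Context Beats Prompts!", "Key takeaways...",
                          "https://example.com/post", date(2025, 1, 15), ["ai", "context"])
    saved_text = note_path.read_text(encoding="utf-8")
print(note_path.name)
```

A browser extension or share-sheet shortcut would call something like this; the habit matters more than the tool.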

Pre-processing: Each document gets tagged and enriched with metadata before entering the repository. Date, source, topic tags, people involved: whatever makes it findable later. A local AI model can help with this, automatically extracting key entities and suggesting relevant tags.
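A minimal sketch of the pre-processing step, assuming a hand-written keyword table stands in for the local model and that meeting notes list people on an `Attendees:` line. Both the `TAG_RULES` table and that convention are hypothetical; the point is the shape of the step: text goes in, metadata comes out and gets merged into the frontmatter.

```python
import re

# Hypothetical keyword rules. A local model could replace this lookup table
# by suggesting tags directly; the interface (text in, tags out) stays the same.
TAG_RULES: dict[str, list[str]] = {
    "pricing": ["pricing", "discount", "annual billing"],
    "tech-debt": ["technical debt", "refactor", "legacy code"],
    "hiring": ["interview", "candidate", "offer letter"],
}


def suggest_tags(text: str, rules: dict[str, list[str]] = TAG_RULES) -> list[str]:
    """Return every tag whose keywords appear in the text (plain substring match)."""
    lowered = text.lower()
    return sorted(tag for tag, keywords in rules.items()
                  if any(keyword in lowered for keyword in keywords))


def extract_people(text: str) -> list[str]:
    """Read names from an 'Attendees: A, B' line (an assumed note convention)."""
    match = re.search(r"^attendees:\s*(.+)$", text, flags=re.IGNORECASE | re.MULTILINE)
    if not match:
        return []
    return [name.strip() for name in match.group(1).split(",") if name.strip()]


def enrich(text: str) -> dict[str, list[str]]:
    """Metadata to merge into a note's frontmatter before it enters the vault."""
    return {"tags": suggest_tags(text), "people": extract_people(text)}


meeting = "Attendees: Ana Ruiz, Ben Cho\nAcme asked for annual billing and a discount."
print(enrich(meeting))  # {'tags': ['pricing'], 'people': ['Ana Ruiz', 'Ben Cho']}
```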

Querying: This is where it gets interesting. Direct queries like "show me all meeting notes from last week" are straightforward. Semantic queries like "what have I written about the value of context in AI?" require more sophisticated retrieval. The goal is to surface not just exact matches, but conceptually related content across different timeframes and formats.
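To show the difference between the two query types, here's a small Python sketch over a hypothetical in-memory sample of three notes. The direct query is just a metadata filter. For the ranked query I've swapped in TF-IDF cosine similarity, a classic keyword-weighting technique, because it runs on the standard library alone; it only matches shared words, so truly semantic retrieval would replace it with embeddings from a local or hosted model.

```python
import math
import re
from collections import Counter
from datetime import date

# Hypothetical sample vault: file name -> frontmatter fields plus body text.
NOTES: dict[str, dict] = {
    "2025-03-03-pricing-sync.md": {"type": "meeting", "date": date(2025, 3, 3),
        "text": "Customers push back on pricing; annual billing discount helped close Acme."},
    "2025-03-05-context-essay.md": {"type": "draft", "date": date(2025, 3, 5),
        "text": "Context is what makes AI output valuable: your notes, history and voice."},
    "2025-02-10-retro.md": {"type": "meeting", "date": date(2025, 2, 10),
        "text": "Retro: technical debt in billing service blocked two dependencies."},
}


def meetings_between(notes: dict[str, dict], start: date, end: date) -> list[str]:
    """Direct query: filter on metadata, no ranking needed."""
    return sorted(name for name, note in notes.items()
                  if note["type"] == "meeting" and start <= note["date"] <= end)


def tokenize(text: str) -> list[str]:
    return re.findall(r"[a-z0-9]+", text.lower())


def rank(query: str, notes: dict[str, dict], top_k: int = 3) -> list[tuple[str, float]]:
    """Ranked query: TF-IDF cosine similarity (a lexical stand-in for embeddings)."""
    docs = {name: Counter(tokenize(note["text"])) for name, note in notes.items()}
    df = Counter(term for counts in docs.values() for term in counts)
    n = len(docs)
    # Smoothed inverse document frequency: rarer terms weigh more.
    idf = {term: math.log((1 + n) / (1 + k)) + 1 for term, k in df.items()}

    def weigh(counts: Counter) -> dict[str, float]:
        # Terms never seen in the vault carry no signal, so drop them.
        return {t: c * idf[t] for t, c in counts.items() if t in idf}

    def norm(vec: dict[str, float]) -> float:
        return math.sqrt(sum(w * w for w in vec.values()))

    q = weigh(Counter(tokenize(query)))
    scores = []
    for name, counts in docs.items():
        d = weigh(counts)
        dot = sum(w * d.get(t, 0.0) for t, w in q.items())
        denom = norm(q) * norm(d)
        if denom and dot > 0:
            scores.append((name, dot / denom))
    return sorted(scores, key=lambda s: s[1], reverse=True)[:top_k]


last_week = meetings_between(NOTES, date(2025, 3, 1), date(2025, 3, 7))
print(last_week)  # ['2025-03-03-pricing-sync.md']

results = rank("What have I written about the value of context in AI?", NOTES)
for name, score in results:
    print(f"{score:.2f}  {name}")
```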

Local vs. Cloud: Querying Approaches

For the querying mechanism, I'm exploring several approaches. A local model running on my machine could handle both pre-processing and retrieval, keeping everything private and under my control. Alternatively, I could build a bridge (a Model Context Protocol, or MCP, server) that gives Claude access to my local data store, letting me use cloud models through my existing subscription while keeping ownership of the data.
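Here's a rough sketch of that bridge in Python, assuming the official MCP Python SDK (the `mcp` package and its `FastMCP` class). The search itself is deliberately naive: a note matches if it contains every query word. `search_notes`, `build_server`, the `search_vault` tool name, and the `MAX_CHARS` cap are hypothetical names for illustration.

```python
from pathlib import Path

MAX_CHARS = 4000  # Arbitrary cap so one note can't flood the model's context window.


def search_notes(vault_dir: Path, query: str, limit: int = 5) -> list[dict[str, str]]:
    """Return notes that contain every query word (case-insensitive)."""
    words = query.lower().split()
    results: list[dict[str, str]] = []
    # Reverse path order puts date-prefixed file names newest-first within a folder.
    for path in sorted(vault_dir.rglob("*.md"), reverse=True):
        text = path.read_text(encoding="utf-8", errors="replace")
        lowered = text.lower()
        if words and all(word in lowered for word in words):
            results.append({"path": path.relative_to(vault_dir).as_posix(),
                            "content": text[:MAX_CHARS]})
            if len(results) >= limit:
                break
    return results


def build_server(vault_dir: Path):
    """Expose search_notes as an MCP tool. Requires the `mcp` package."""
    from mcp.server.fastmcp import FastMCP  # imported lazily; only needed to serve

    server = FastMCP("personal-vault")

    @server.tool()
    def search_vault(query: str, limit: int = 5) -> list[dict[str, str]]:
        """Search my personal Markdown vault and return matching notes."""
        return search_notes(vault_dir, query, limit)

    return server


# To serve over stdio for an MCP client such as Claude Desktop:
#     build_server(Path("~/vault").expanduser()).run()
```

Because the tool hands back the note text itself (up to the cap) rather than a remembered summary, Claude can work from something like the actual meeting notes when I'm drafting a follow-up.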

The beauty is that even if the sophisticated querying falls short initially, a well-organized Obsidian vault that centralizes all my documents would still provide significant value. I can search manually, browse by tags, or use Obsidian's built-in graph view to discover connections. The tools will only get better from here.
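Obsidian's graph view is drawn from `[[wikilinks]]` between notes, and tag browsing comes from `#tags`, so even without any AI the same connections can be computed straight from the files. A minimal Python sketch, assuming notes are identified by file name (duplicate names in different folders would collide here) and using a simplified tag pattern rather than Obsidian's exact rules; `load_vault` and `build_indexes` are hypothetical helpers.

```python
import re
from collections import defaultdict
from pathlib import Path

# [[Target]], [[Target|alias]] and [[Target#Heading]] all link to "Target".
WIKILINK = re.compile(r"\[\[([^\]|#]+)")
# Simplified inline tag: '#' not preceded by a word character or '/', then a letter.
TAG = re.compile(r"(?<![\w/])#([A-Za-z][\w/-]*)")


def load_vault(vault_dir: Path) -> dict[str, str]:
    """Map each note name (file stem, which wikilinks refer to) to its text."""
    return {p.stem: p.read_text(encoding="utf-8") for p in vault_dir.rglob("*.md")}


def build_indexes(notes: dict[str, str]) -> tuple[dict[str, set[str]], dict[str, set[str]]]:
    """Return (backlinks, tag_index): who links to each note, which notes carry each tag."""
    backlinks: dict[str, set[str]] = defaultdict(set)
    tag_index: dict[str, set[str]] = defaultdict(set)
    for name, text in notes.items():
        for target in WIKILINK.findall(text):
            backlinks[target.strip()].add(name)
        for tag in TAG.findall(text):
            tag_index[tag.lower()].add(name)  # treat #Pricing and #pricing alike
    return dict(backlinks), dict(tag_index)


notes = {
    "Acme pricing call": "Met with [[Ana Ruiz]] about annual billing. #pricing",
    "Q3 retro": "Follow-up to [[Acme pricing call|the Acme call]]. #retro #Pricing",
}
backlinks, tag_index = build_indexes(notes)
print(backlinks["Acme pricing call"])  # {'Q3 retro'}
print(sorted(tag_index["pricing"]))    # ['Acme pricing call', 'Q3 retro']
```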

The Real Challenge: Building Habits

The real challenge isn't technical: it's developing sustainable capture habits. This means browser extensions for saving web content, mobile workflows for voice memos, automated exports from tools like Notion or Slack, and integrations with the tools I already use. The system only works if feeding it becomes as natural as bookmarking or taking screenshots.
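As one example of an automated export, here's a Python sketch that turns a Slack workspace export into daily Markdown notes. It assumes the export's usual layout (one folder per channel, one `YYYY-MM-DD.json` file per day, each a list of messages with `ts`, `user`, and `text`), skips any message that carries a `subtype` (joins, bot posts, and similar), and falls back to the raw user ID when no `user_profile.real_name` is present. The function names and output layout are hypothetical.

```python
import json
from datetime import datetime, timezone
from pathlib import Path


def slack_day_to_markdown(channel: str, day: str, messages: list[dict]) -> str:
    """Render one day of one channel as a Markdown note with frontmatter."""
    lines = ["---", "source: slack", f"channel: {json.dumps(channel)}",
             f"date: {day}", "---", "", f"# Slack: {channel}, {day}", ""]
    for msg in messages:
        if msg.get("subtype"):  # simplification: skip joins, bot posts, etc.
            continue
        when = datetime.fromtimestamp(float(msg["ts"]), tz=timezone.utc)
        author = msg.get("user_profile", {}).get("real_name") or msg.get("user", "unknown")
        # Indent continuation lines so multi-line messages stay inside their list item.
        text = msg.get("text", "").replace("\n", "\n  ")
        lines.append(f"- **{when:%H:%M} UTC** {author}: {text}")
    return "\n".join(lines) + "\n"


def export_channel(export_dir: Path, channel: str, vault_dir: Path) -> list[Path]:
    """Convert every <export_dir>/<channel>/<YYYY-MM-DD>.json file into a vault note."""
    out_dir = vault_dir / "slack" / channel
    out_dir.mkdir(parents=True, exist_ok=True)
    written = []
    for day_file in sorted((export_dir / channel).glob("*.json")):
        messages = json.loads(day_file.read_text(encoding="utf-8"))
        note = out_dir / f"{day_file.stem}.md"
        note.write_text(slack_day_to_markdown(channel, day_file.stem, messages),
                        encoding="utf-8")
        written.append(note)
    return written


sample = [
    {"type": "message", "subtype": "channel_join", "user": "U01",
     "text": "<@U01> has joined the channel", "ts": "1741000000.000100"},
    {"type": "message", "user": "U02", "text": "Acme wants annual billing.",
     "ts": "1741003200.000200", "user_profile": {"real_name": "Ana Ruiz"}},
]
note_text = slack_day_to_markdown("sales", "2025-03-03", sample)
print(note_text)
```

Run on a schedule, something like this keeps the vault fed without relying on anyone remembering to copy threads over.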

Yes, this requires some initial setup time and ongoing discipline. But consider what you're gaining: no more "I know I wrote something about this somewhere" moments. No more rebuilding context from scratch when switching projects. No more forgetting the reasoning behind critical decisions.

This is less a product and more a toolkit: a recipe that depends entirely on the specific data sources in your life. But that's exactly the point.

Why This Matters Now

We're at an inflection point where individuals can finally take control of their own data and use it to create something genuinely valuable. The companies will provide services that work within their walled gardens, but that's no longer our only option.

When this approach becomes common, it changes professional dynamics. Meetings become more substantive when participants can instantly access relevant prior discussions. Technical decisions get better documentation because the system makes retrieval effortless. Knowledge transfer improves because institutional memory becomes queryable rather than tribal.

I was recently talking to a CEO about AI adoption at his company. The point I kept trying to reinforce is that replacing individual actions with AI isn't the real opportunity. We can now reimagine what the complete system looks like: perfect knowledge transfer, institutional memory that doesn't walk out the door, decisions informed by the full history of relevant discussions.

The future belongs to those who own and leverage their own context. I'm starting to get more thoughtful about building mine.

For Companies

If your company isn't already bringing together all of its documents and data streams to leverage in this way, you should. Given everything above, there's no reason you should struggle to onboard a new team member, work to sync people up on progress or status, or have anyone in customer support lack access to the latest documentation on a product rollout. This is now a solved problem.

Are you happy with the existing offerings or would you like your own data pipeline?